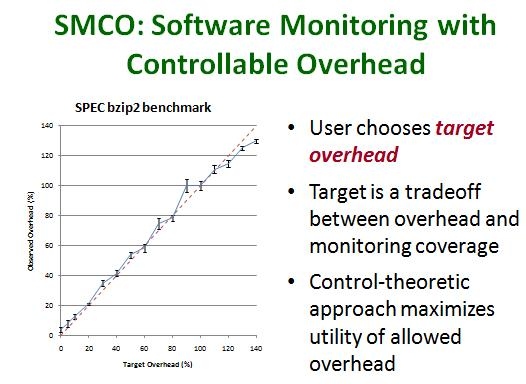

SMCO controls the overhead of runtime monitoring by selectively disabling (and then re-enabling) monitoring. Users choose exactly how much overhead they are willing to accept, and SMCO monitors as many events as it can without exceeding that user-specified target overhead, giving the user full control over the tradeoff between overhead and monitoring coverage.
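The feedback loop behind monitoring "as many events as it can" without exceeding a target can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: overhead is taken to be monitoring time divided by program time, costs are simulated rather than measured, and the class and function names are hypothetical. SMCO's own controllers are more sophisticated than this simple on/off threshold rule; the sketch only shows the idea of measuring overhead and toggling monitoring in response.

```python
class GlobalOverheadController:
    """Toy controller: monitor the next event only while the overhead
    observed so far is below the user's target.

    Overhead is assumed to be monitoring time divided by program time,
    as a fraction (0.2 means 20%). This threshold rule is an assumption
    for illustration, not SMCO's actual control law.
    """

    def __init__(self, target_overhead):
        self.target = target_overhead
        self.monitor_time = 0.0  # time spent inside the monitor
        self.program_time = 0.0  # time spent running the program itself

    def observed_overhead(self):
        if self.program_time == 0.0:
            return 0.0
        return self.monitor_time / self.program_time

    def should_monitor(self):
        # Enable monitoring for this event only if we are under budget.
        return self.observed_overhead() < self.target

    def record_program(self, elapsed):
        self.program_time += elapsed

    def record_monitor(self, elapsed):
        self.monitor_time += elapsed


def simulate(target, n_events, program_cost=1.0, monitor_cost=0.5):
    """Run n_events with simulated (not measured) costs; return the number
    of monitored events and the final observed overhead."""
    ctl = GlobalOverheadController(target)
    monitored = 0
    for _ in range(n_events):
        ctl.record_program(program_cost)
        if ctl.should_monitor():
            ctl.record_monitor(monitor_cost)
            monitored += 1
    return monitored, ctl.observed_overhead()
```

With these simulated costs (1.0 time unit of program work and 0.5 units of monitoring per event), `simulate(0.2, 1000)` monitors roughly 400 of the 1,000 events and finishes with an observed overhead close to 0.2; raising the target to 0.4 lets it monitor roughly twice as many.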

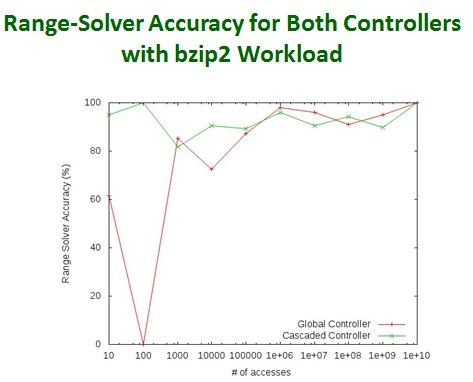

This graph shows the SPEC bzip2 benchmark running with an SMCO-controlled monitor that analyzes the ranges of integer variables. The observed overhead (y-axis) closely tracks the user-specified target overhead (x-axis); the dashed red line marks the ideal y = x, where the two are equal.

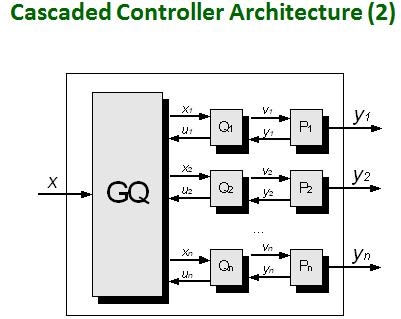

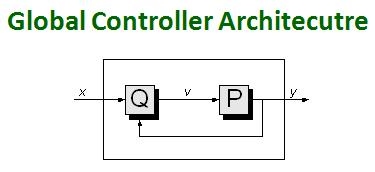

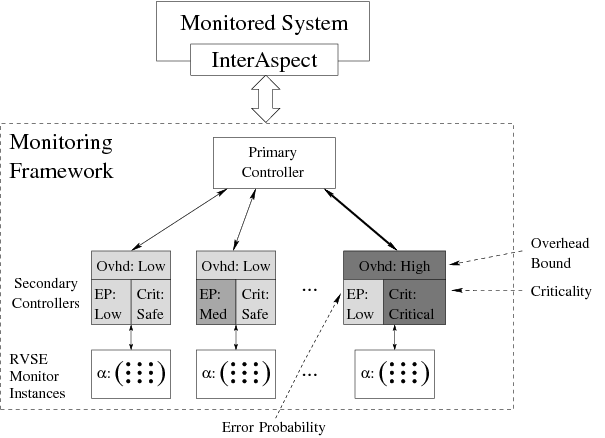

SMCO provides two kinds of controllers. The cascade controller monitors the overhead from each source of events separately (in most applications of SMCO, each function is a source), so that it can divide overhead fairly among these sources. The global controller considers only the total overhead from all monitoring. Because it needs less computation to make control decisions, the global controller can monitor more events than the cascade controller for the same amount of overhead. However, the cascade controller can ensure that events from sources that rarely produce events are not drowned out by events from more prolific sources.
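The fair division performed by the cascade controller can be illustrated with a similar toy model. This sketch assumes the target overhead is split evenly across sources and that each source is throttled against its own share; the names and the simulated costs are hypothetical, and SMCO's actual cascade controller is not reproduced here.

```python
from collections import defaultdict


class CascadeOverheadController:
    """Toy per-source controller: the target overhead is split evenly among
    event sources, and each source is throttled against its own share.

    The fixed even split is a simplifying assumption; this sketch does not
    reassign budget that a quiet source leaves unused.
    """

    def __init__(self, target_overhead, sources):
        self.share = target_overhead / len(sources)
        self.monitor_time = defaultdict(float)  # per-source monitoring time
        self.program_time = 0.0

    def record_program(self, elapsed):
        self.program_time += elapsed

    def should_monitor(self, source):
        if self.program_time == 0.0:
            return True
        return self.monitor_time[source] / self.program_time < self.share

    def record_monitor(self, source, elapsed):
        self.monitor_time[source] += elapsed


def simulate(steps=1000, rare_every=50, target=0.2, monitor_cost=0.5):
    """A prolific source fires every step; a rare one fires every
    rare_every steps. Return (events seen, events monitored) per source."""
    ctl = CascadeOverheadController(target, ["prolific", "rare"])
    seen = {"prolific": 0, "rare": 0}
    monitored = {"prolific": 0, "rare": 0}
    for step in range(steps):
        ctl.record_program(1.0)
        firing = ["prolific"] + (["rare"] if step % rare_every == 0 else [])
        for src in firing:
            seen[src] += 1
            if ctl.should_monitor(src):
                ctl.record_monitor(src, monitor_cost)
                monitored[src] += 1
    return seen, monitored
```

With the defaults, all 20 events from the rare source are monitored, while the prolific source is throttled to roughly 200 of its 1,000 events. Under a single global budget, whether a rare event gets monitored would instead depend on how much of the budget the prolific source had already consumed when that event arrived.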

This graph shows the percentage of variable accesses monitored, with variables grouped by how frequently they are accessed. In total, the global controller monitors more events than the cascade controller at the same target overhead, because it does not spend time executing the relatively expensive cascade controller logic. As a result, it has better accuracy for variables with more than 10⁶ updates. The cascade controller has better accuracy for rarely accessed variables.

Overhead control makes it impossible to exhaustively monitor events for every object in a system. Our first SMCO implementation distributes available monitoring time fairly, but we can get better results by giving priority to objects that are closest to failing.

Adaptive Runtime Verification (ARV) uses a system model to estimate both the probability that an object has experienced an error and the object's expected distance from the error state. The expected distance serves as a criticality measure that ARV uses to choose high priority objects. The overhead control system can choose to provide more monitoring resources to high priority objects.
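A minimal Python sketch of the criticality computation, assuming the system model has already produced, for each object, a belief (a probability for each model state) and that each state's distance to the error state is known, for example as the number of transitions on the shortest path to it. How ARV derives these quantities is not shown; the function names and the three-state model below are hypothetical.

```python
def error_probability(belief, error_state):
    """Probability that the object is already in the error state."""
    return belief.get(error_state, 0.0)


def expected_distance(belief, distance):
    """Expected distance to the error state: sum over states of
    P(state) * distance(state)."""
    return sum(p * distance[state] for state, p in belief.items())


def pick_high_priority(beliefs, distance, k):
    """Return the k objects closest to failing (smallest expected distance)."""
    return sorted(beliefs, key=lambda obj: expected_distance(beliefs[obj], distance))[:k]


# Hypothetical three-state model: distance to error, in transitions.
distance = {"ok": 3, "warn": 1, "error": 0}
beliefs = {
    "A": {"ok": 1.0},
    "B": {"ok": 0.5, "warn": 0.5},
    "C": {"warn": 0.9, "error": 0.1},
}
print(pick_high_priority(beliefs, distance, 2))  # ['C', 'B']
print(error_probability(beliefs["C"], "error"))  # 0.1
```

Object C is ranked first because its expected distance (0.9) is the smallest, even though its probability of already being in the error state is only 0.1. An overhead controller could then give C and B a larger share of the monitoring budget than A.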

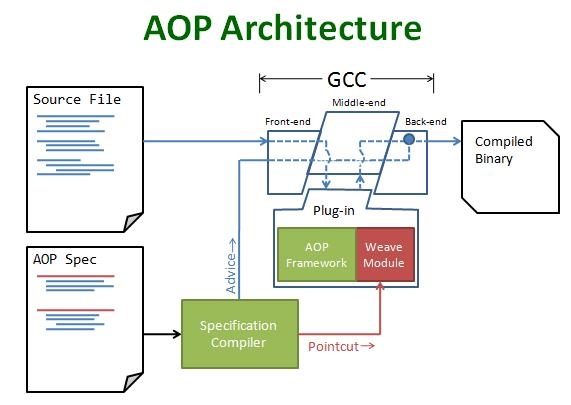

Aspect-Oriented Programming (AOP) is a programming approach that improves modularity by separating concerns (secondary or supporting functions isolated from the main program's business logic) into units called aspects.

We are developing an API based on the ideas of AOP that is designed to simplify the process of writing compiler plug-ins for performing instrumentation.

Writing a plug-in with the AOP API involves the following steps.

The GCC plug-in architecture makes it possible to add custom compiler passes that can directly modify the compiler's internal representation of the code.

Using calls in our API, the pass can create pointcuts that match specific events in the program source. Pointcuts can be created for events like calls to specified functions or variable assignments.

The plug-in pass can iterate over every event in the pointcut; these matched events are known as join points. For each join point, the pass can insert a call to an advice function and specify what information to pass to the advice, including information specific to that join point, such as the particular arguments of a function call.
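The steps above can be illustrated with a toy Python model in which a "program" is just a list of call and assignment statements. This is not GCC's internal representation or our API: `Stmt`, the pointcut functions, `insert_advice_before`, and the advice name `on_malloc` are hypothetical, chosen only to show how a pointcut selects join points and how a call to advice, carrying each join point's own arguments, is inserted before each one.

```python
from dataclasses import dataclass, field


@dataclass
class Stmt:
    """One statement in a toy IR: a function call or a variable assignment."""
    kind: str  # "call" or "assign"
    name: str  # callee for a call, variable for an assignment
    args: list = field(default_factory=list)  # call arguments or assigned value


def call_pointcut(ir, func_name):
    """Pointcut matching every call to func_name; each match is a join point."""
    return [s for s in ir if s.kind == "call" and s.name == func_name]


def assign_pointcut(ir, var_name):
    """Pointcut matching every assignment to var_name."""
    return [s for s in ir if s.kind == "assign" and s.name == var_name]


def insert_advice_before(ir, join_points, advice, pass_args=True):
    """Return a new IR with a call to `advice` before each join point,
    optionally forwarding the join point's own arguments to the advice."""
    targets = {id(s) for s in join_points}  # match by identity, not value
    out = []
    for s in ir:
        if id(s) in targets:
            out.append(Stmt("call", advice, list(s.args) if pass_args else []))
        out.append(s)
    return out


ir = [
    Stmt("assign", "n", ["64"]),
    Stmt("call", "malloc", ["n"]),
    Stmt("call", "free", ["p"]),
    Stmt("call", "malloc", ["16"]),
]
instrumented = insert_advice_before(ir, call_pointcut(ir, "malloc"), "on_malloc")
for s in instrumented:
    print(s.kind, s.name, s.args)
```

Running it prints the instrumented program, with a call to `on_malloc` inserted before each of the two `malloc` calls and passed that call's argument (`n` in the first case, `16` in the second). The original list is left unchanged, mirroring a pass that rewrites the code only at matched join points.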