
When a package uses autotools such as Autoconf, it generally becomes easier to maintain. However, even Autoconf has its limitations. The first goal of this section is to provide a method of evaluating a package's complexity for developers who are using or considering using autotools for that package. Note that we are specifically concerned with complexity concerning the portability of that package to newer operating environments. The second goal of this section is to show the benefits and limitations of using autotools.

Table 1 lists the packages that we evaluated. We picked ten large, popular packages that use autotools, including Am-utils. We also evaluated the Amd package, which is Am-utils before it was autotooled. We evaluated as many versions of these packages as we could find, spanning development cycles of 2 to 9 years. Covering many versions over several years helps ensure that our reported results are stable.

Figures 1 through 6 include "error" bars showing one standard deviation from the mean. Since we evaluated several packages and several versions of each, the standard deviation captures the general variation in each package's size and complexity over time.

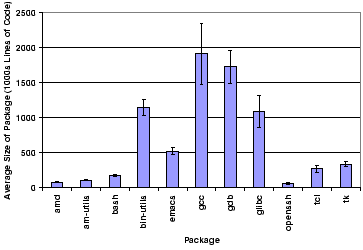

A typical metric of code complexity is a count of the number of lines of code in the package, as can be seen in Figure 1. As we can see, the four largest packages are Binutils, Gcc, Gdb, and Glibc. The number of lines of code in a package is one useful measure of the effort involved in developing and maintaining the package, but may not tell the whole story.

A different measure of the portability complexity of a package is the number of CPP conditionals that appear in the code: #if, #ifdef, #ifndef, #else, and #elif. Each of those statements indicates one extra code compilation diversion. Generally, each of those CPP conditionals accounts for some difference between systems, to ensure that the code can compile cleanly on each system. In other words, the effort to port a software package to multiple systems and maintain it on those systems is related to the number of CPP conditionals used.
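The counting step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the tool used for the measurements: it matches directives line by line with a regular expression and does not skip directives that appear inside comments or string literals. The names `count_cpp_conditionals` and `SAMPLE` are hypothetical.

```python
import re

# One match per line that starts with one of the five directives named above.
# Optional blanks are allowed before and after '#', as in "#  ifdef FOO".
# The trailing \b rejects longer words that merely begin with a directive name.
CPP_CONDITIONAL = re.compile(
    r"^[ \t]*#[ \t]*(?:if|ifdef|ifndef|else|elif)\b", re.MULTILINE
)


def count_cpp_conditionals(source: str) -> int:
    """Count #if, #ifdef, #ifndef, #else, and #elif lines in C source text."""
    return len(CPP_CONDITIONAL.findall(source))


# Hypothetical fragment for illustration only.
SAMPLE = """\
#include <stdio.h>
#ifdef HAVE_UNISTD_H
# include <unistd.h>
#else
# include <io.h>
#endif
#if defined(__linux__)
int x = 1;
#elif defined(__sun)
int x = 2;
#endif
"""

if __name__ == "__main__":
    # Counts #ifdef, #else, #if, and #elif; #include and #endif are ignored.
    print(count_cpp_conditionals(SAMPLE))
```

Note that #endif is deliberately not counted, since the text lists only the five directives that open or switch a conditional branch.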

Figure 2 shows the average number of CPP conditionals per package. Since Autoconf supports conditional compilation of whole source files, we counted each of those conditionally-compiled source files as one additional CPP conditional. Here, the same packages that have the most lines of code (Figure 1) also have the largest number of CPP conditionals. This is not surprising: as the size of the package grows, the number of CPP conditionals is likely to grow as well. This suggests that perhaps neither code size nor the absolute number of CPP conditionals provides a good measure of code complexity.

Next, we combined the above two metrics to provide a normalized metric of code complexity for the purposes of portability and maintainability of code over multiple operating systems. In Figure 3 we show the average number of CPP conditionals per 1000 lines of code. We notice three things in Figure 3.

First, the difference between the most and least complex packages is not as large as in Figures 1 and 2. In those two, the difference was as much as an order of magnitude, whereas in Figure 3 the difference is a factor of 2-4.

Second, the standard deviation in this figure is smaller than in the first two. This means that although larger packages do have more CPP conditionals, the average distribution of CPP conditionals is almost fixed for a given package. The implication of this is also that a given package may have a native (portability) complexity that is not likely to change much over time and that this measure is related to the nature of the package, not its size.

The third and most important thing we notice in Figure 3 is that the packages that appear to be very complex in Figures 1 and 2 are no longer the most complex; leading in average distribution of CPP conditionals are Am-utils and Bash. To understand why, we have to understand what makes a software package more complex to port to another operating system. For the most part, languages such as C and C++ are portable across most systems. The biggest differences come when a C program begins interacting with the operating system and the system libraries, primarily through system calls. Although POSIX provides a common set of system call APIs [3], not all systems are POSIX compliant and every system includes many additional system calls and ioctls that are not standardized. Furthermore, although the C library (libc) provides a common set of functions, many functions in it and in other libraries are not standardized. For example, there are no widely-standardized methods for accessing configuration files that often reside in /etc. Similarly, software (e.g., libbfd in Binutils) that handles different binary formats (ELF, COFF, a.out) must function properly across many platforms regardless of the binary formats supported by those platforms.

Despite their large size, Binutils, Gcc, Gdb, and Glibc--on average--do not use as many operating system features as Bash and Am-utils do. For example, Gcc and Binutils primarily need to be able to read files, process them internally (parsing, linking, etc.), and then write output files. Most of their complexity exists in portable C code that performs file parsing and target format generation. Whereas Gcc and Binutils are large and complex packages in their own right, porting them to other operating systems may not be as difficult a job as for a program that uses a wide variety of system calls or a program that interacts more closely with other parts of the running system. (In this paper we do not account separately for package-specific portability complexities: Gcc and Binutils to new architectures, Am-utils to new file systems, Emacs and Tk to new windowing systems, etc.)

For example, Bash must perform complex process and terminal management, and it invokes many system calls as well as special-purpose ioctls. Amd (part of Am-utils) is a user-level file server and interacts heavily with the rest of the operating system to manage other file systems: it interacts with many local and remote services (NFS, NIS/NIS+, DNS, LDAP, Hesiod); it understands custom file systems (e.g., loop-device mounts in Linux, Cachefs on Solaris, XFS on IRIX, Autofs, and many more); it is both an NFS client and a local NFS server; and it communicates with the local host's kernel using an asynchronous RPC engine. By all rights, an automounter such as Amd is a file system server and should reside in the kernel. Indeed, Autofs [1,4] is an effort to move the critical parts of the automounter into the kernel.
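Returning to the normalized metric of Figure 3, the computation can be sketched as follows. The per-version numbers below are made up purely for illustration and are not measurements from any package; the use of the sample standard deviation (`statistics.stdev`) is also an assumption, since the text does not say which estimator was used.

```python
from statistics import mean, stdev


def conditionals_per_kloc(num_conditionals: int, num_lines: int) -> float:
    """Return the number of CPP conditionals per 1000 lines of code."""
    return 1000.0 * num_conditionals / num_lines


# Hypothetical (lines of code, CPP conditionals) for three versions of one
# package. These values are invented for the example.
VERSIONS = [(50_000, 1_000), (60_000, 1_260), (80_000, 1_520)]

DENSITIES = [conditionals_per_kloc(c, n) for n, c in VERSIONS]

if __name__ == "__main__":
    print("per-version density:", DENSITIES)
    print("mean:", mean(DENSITIES))
    print("standard deviation:", stdev(DENSITIES))
```

In this invented example the package grows by 60% in size while its density stays within about one conditional per 1000 lines of the mean, which is the kind of behavior the small error bars in Figure 3 indicate.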

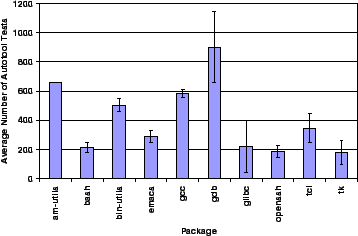

Before building a package, it must be configured. The number of Autoconf (and Automake and Libtool) tests that a package must perform is another useful measure of the complexity of the package. The more tests performed, the more complex the package is to port to another system, since the package requires a larger number of system-discriminating features.
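One rough way to approximate such a count is to scan a package's configure.in for invocations of test macros. The sketch below is an assumption about how this could be done, not a description of how the figures in this paper were produced: it counts macro invocations rather than the individual checks each invocation expands to, and the prefix list and the sample input are chosen for illustration only.

```python
import re

# Assumed set of prefixes that identify feature tests. Setup macros such as
# AC_INIT and AC_OUTPUT do not match and are therefore not counted.
TEST_MACRO = re.compile(r"\bAC_(?:CHECK|TRY|PROG|FUNC|HEADER)_[A-Z0-9_]+")


def count_test_invocations(configure_in: str) -> int:
    """Count invocations of test-like Autoconf macros in configure.in text."""
    return len(TEST_MACRO.findall(configure_in))


# Hypothetical configure.in fragment; the file names are placeholders.
SAMPLE_CONFIGURE_IN = """\
AC_INIT(src/main.c)
AC_PROG_CC
AC_CHECK_HEADERS(unistd.h sys/mount.h)
AC_CHECK_FUNCS(mount umount)
AC_TRY_COMPILE([#include <sys/types.h>], [int i;], , )
AC_OUTPUT(Makefile)
"""

if __name__ == "__main__":
    print(count_test_invocations(SAMPLE_CONFIGURE_IN))
```

A single invocation such as AC_CHECK_HEADERS with two header names performs two checks at configure time, so a count of tests actually run would be higher than the count of invocations shown here.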


Figure 4 shows the average number of tests that each package performs. The figure validates some of what we already knew: that Binutils, Gcc, and Gdb are large and complex. But we also see that Am-utils performs more than 600 tests; only Gdb performs more tests on average. This confirms that Am-utils is indeed a complex package to port, even though it is less than one-tenth the size of Gcc or Gdb.

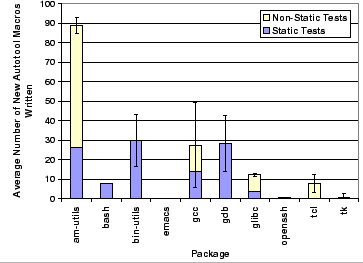

Since Autoconf comes with many useful tests already, we also measured how many new Autoconf tests each package's maintainers wrote. The number of new macros written shows two things. First, Autoconf is limited to what it already supports, and new macros are almost always needed for large packages. Second, the number of new macros needed is an indication of how complex a given package may be.

Figure 5 shows the average number of new M4 macros (Autoconf tests) that were written for each package. As we see, most packages do not need more than 10 new tests, if any. Binutils, Gcc, and Gdb are more complex and required about 30 new tests each. Am-utils, on the other hand, required nearly 90 new tests. According to this metric, Am-utils is more complex than the other packages we measured because it requires more tests that Autoconf does not provide. Indeed this has been true in our experience developing and maintaining Am-utils: many tests we wrote try to detect kernel features and internal behavior of certain system calls that none of the other packages deal with (for example, how to pass file-system-specific mount options to the mount(2) system call).


Figure 5 also shows the portion of static macros that were written. As we described in Section 3.1.4, these are macros that cannot detect a feature 100% deterministically and are often written as a case statement for different operating systems. In other words, these tests cannot use the power of Autoconf to perform automated feature detection. As we see in Figure 5, most packages need a few such static macros, if any. Again, Am-utils takes the lead on such static macros. The main reason for this is that a reliable way to test such features is impossible without superuser privileges and knowledge of the entire site (i.e., the names, IP addresses, and exported resources of various network servers).

The conclusion we draw in this section is that although Autoconf continues to evolve and provide more tests, maintainers of large and complex packages may still have to write 10-30 custom macros. Moreover, some packages will always need a number of static macros, for those features that Autoconf cannot test in a reliable, automated way.

In Sections 4.1 and 4.2 we established that Am-utils is a more complex package to port than it appears from looking purely at its size. In several ways, Am-utils represents an upper bound for the complexity of portable C code: of the packages examined it has the most dense distribution of CPP conditionals and the largest number of custom macros. In addition, it performs critical file system services that are often part of the kernel proper. Therefore, analyzing Am-utils in more detail provides more insight into the process of maintaining and porting large or complex packages. Moreover, since we have maintained this code for nearly ten years, we can provide a unique perspective on the history of the development of Am-utils dating back to well before it used autotools.


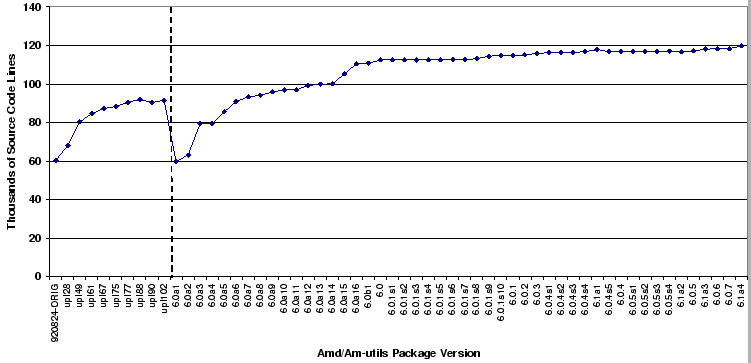

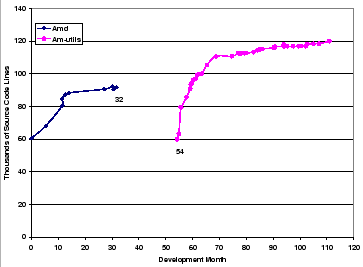

In Figure 6 we see the code size for all released versions of Am-utils. The vertical dashed line separates the autotooled code on the right from the non-autotooled code on the left (before autotooling, the package had a "upl" versioning scheme). The most important factor is the drop in code size after autotooling. The last version before autotooling (amd-upl102) was 91640 lines long. The first version after autotooling (am-utils-6.0a1) was only 59804 lines long. The two versions offered identical features and behavior, but the latter used Autoconf, Automake, and Libtool. This drop of 35% in code size was afforded thanks to the code cleanup and simplification that resulted from using the GNU autotools.
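The 35% figure follows directly from the two line counts quoted above. As a quick check, with the helper name `relative_drop` being hypothetical:

```python
LINES_BEFORE = 91640  # amd-upl102, last version before autotooling
LINES_AFTER = 59804   # am-utils-6.0a1, first autotooled version


def relative_drop(before: int, after: int) -> float:
    """Return the fractional reduction from `before` to `after`."""
    return (before - after) / before


if __name__ == "__main__":
    removed = LINES_BEFORE - LINES_AFTER
    print(f"{removed} lines removed, "
          f"{relative_drop(LINES_BEFORE, LINES_AFTER):.1%} of the original")
```

The difference is 31836 lines, or roughly 34.7% of the original size, which rounds to the 35% reported.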

Since the release of many versions occurred over a period of nearly ten years, we show in Figure 7 the timeline for each release and the code size since the first release. We can see that for the first 32 months, nearly three years, the non-autotooled package continued to grow in size. However, that growth in size was accompanied by a serious increase in the difficulty of maintaining the package, which hindered the package's evolution; the older code contained a large number of multi-level nested CPP macros, often resulting in what is dubbed "spaghetti code." It took nearly two more years before the first autotooled version of Amd was released. Of those 22 months, about 6 months prior to month 54 were spent learning how to use Autoconf, Automake, and Libtool, understanding the inner workings of Amd, and adapting the code to use autotools. (Amd was originally written by Jan-Simon Pendry and Nick Williams, not the authors of this paper. Therefore we did not initially understand every part of the original 60432-line code base.)

The autotooling effort paid off significantly in two ways. First, the autotooled version was more than one-third smaller. Second, the autotooling process allowed us to add new features to Amd and port it to new systems with minimal effort. For the first 12 months after its initial autotooled release, Am-utils grew by 70%, adding major features such as NFSv3 [8] support, Autofs [1] support, a run-time automounter configuration file /etc/amd.conf, and offering dozens of new ports. This growth in size did not complicate the code much, as can be seen from the small standard deviation for Am-utils in Figure 3. The growth rate has slowed over the past two years, as the Am-utils package became more stable and fewer new features or ports were required.

The conclusion we draw from our experiences is that large packages can benefit greatly from using autotools such as Autoconf. Autoconf-based packages are easier to maintain, develop, and port to new systems.

Autotooling a package bears a build-time performance cost. Before compiling a package, configure must run to detect system features. This detection process can consume a lot of time and CPU resources. Therefore, Autoconf supports caching the results of a configure run, to be used in later invocations. These cached results can be used as long as no changes are made to the system that could invalidate the cache, such as an OS upgrade, installation of new software packages, or de-installation of existing software packages.

We measured the elapsed time it took to build a package before and immediately after it was autotooled. We used the last version of Amd before it was autotooled (upl102) and the first version after it was autotooled (6.0a1) because these two included functionally identical code. We ran tests on a number of different Unix systems configured on identical hardware: a Pentium-III 650MHz PC with 128MB of memory. We ran each test ten times and averaged the results. The standard deviations for these tests were less than 3% of the mean.

Table 2 shows the results of our tests. We see that just compiling the newly autotooled code takes more than twice as long. That is because the autotooled code includes long automatically generated header files such as config.h in every source file--despite the fact that the autotooling process reduced the size of the package itself by one-third. Worse, configuring the newer Am-utils package alone now takes more than 100 seconds. However, after that first run, re-configuring the package with cached results runs four times faster.

If we consider the worst-case overall time it takes to build this package, including the configuration part without a cache, then building Am-utils-6.0a1 is nearly five times slower than its functionally-equivalent predecessor, Amd-upl102.

Through this work, we identified five limitations to GNU autotools. First, developers must be fairly knowledgeable in using these autotools, including understanding how they work internally.

Second, building code that was autotooled often takes longer than non-autotooled equivalents. However, given ever-increasing CPU speeds, this limitation is often not as important as ease of maintenance and configuration.

Third, developing code with autotools requires using a GNU version of the M4 processor. Also, Autoconf depends on the native system to provide stable and working versions of the Bourne shell sh, as well as sed, cpp, and egrep among others. Even though such tools come with most Unix systems, they do not always behave the same. When they behave differently, maintainers of GNU autotools must use common features that will work portably across all known systems.

Fourth, although autotools support cross-compilation environments, which further helps the portability of cross-compiled code, Autoconf generally cannot execute binary tests meant for one platform on another. This limits Autoconf's ability to detect certain features that require the execution of binaries.

Last, developers may still have to write custom tests and M4 macros for complex or large packages. Developing, testing, and debugging such tests is often difficult since they intermix M4 and shell syntax.